Introduction

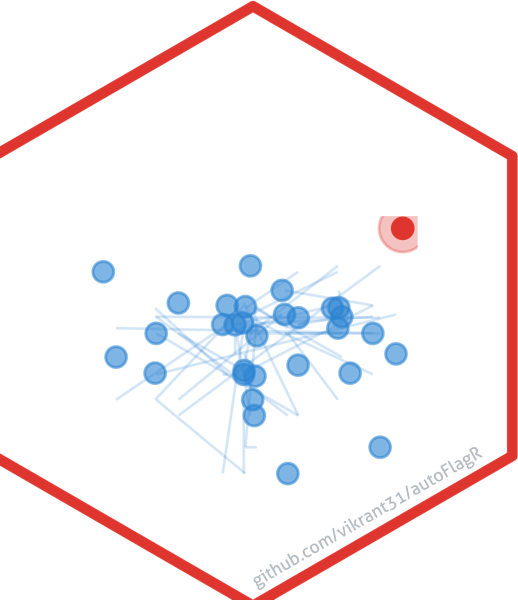

autoFlagR is an R package for automated data quality auditing using unsupervised machine learning. It provides AI-driven anomaly detection for data quality assessment, primarily designed for Electronic Health Records (EHR) data, with benchmarking capabilities for validation and publication.

Basic Workflow

The typical workflow consists of three main steps:

- Preprocess your data

- Score anomalies using AI algorithms

- Flag top anomalies for review

Step 2: Prepare Your Data

The prep_for_anomaly() function automatically handles:

- Identifier columns (patient_id, encounter_id, etc.)

- Missing value imputation

- Numerical feature scaling (MAD or min-max)

- Categorical variable encoding (one-hot)
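To make these steps concrete, here is a minimal base R sketch of the same kinds of transformations. It is not the package's implementation: the helper names `mad_scale()` and `minmax_scale()`, the toy data, and the choice of median imputation are assumptions made purely for illustration.

```r
# Robust scaling: centre on the median and divide by the MAD.
mad_scale <- function(x) {
  s <- mad(x, na.rm = TRUE)
  if (s == 0) s <- 1  # guard against constant columns
  (x - median(x, na.rm = TRUE)) / s
}

# Min-max scaling to the [0, 1] range.
minmax_scale <- function(x) {
  rng <- range(x, na.rm = TRUE)
  if (diff(rng) == 0) return(rep(0, length(x)))
  (x - rng[1]) / diff(rng)
}

# Hypothetical toy data: one identifier, one numeric, one categorical column.
toy <- data.frame(
  id = 1:5,
  cost = c(100, 120, 110, NA, 5000),
  gender = c("M", "F", "F", "M", "F")
)

# Assumed imputation rule: replace missing numeric values with the median.
toy$cost[is.na(toy$cost)] <- median(toy$cost, na.rm = TRUE)

# Drop the identifier, scale the numeric column, one-hot encode the category.
features <- cbind(
  cost = mad_scale(toy$cost),
  model.matrix(~ gender - 1, data = toy)
)

print(features)
print(minmax_scale(toy$cost))
```

MAD scaling keeps a single extreme value from compressing the rest of the column, which is one reason robust scaling is a common choice before outlier scoring. With the package, preprocessing is handled in one call, as in the example that follows.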

# Load the package so the functions used below are available
library(autoFlagR)

# Example healthcare data
data <- data.frame(
  patient_id = 1:200,
  age = rnorm(200, 50, 15),
  cost = rnorm(200, 10000, 5000),
  length_of_stay = rpois(200, 5),
  gender = sample(c("M", "F"), 200, replace = TRUE),
  diagnosis = sample(c("A", "B", "C"), 200, replace = TRUE)
)

# Introduce some anomalies
data$cost[1:5] <- data$cost[1:5] * 20  # Unusually high costs
data$age[6:8] <- c(200, 180, 190)      # Impossible ages

# Prepare data for anomaly detection
prepared <- prep_for_anomaly(data, id_cols = "patient_id")

Step 3: Score Anomalies

Use either Isolation Forest (default) or Local Outlier Factor (LOF):
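For intuition about what the LOF option measures, the following is a small base R sketch of the Local Outlier Factor idea: a point is scored by how sparse its neighbourhood is relative to the neighbourhoods of its nearest neighbours. The function name `lof_sketch()` and the toy data are hypothetical, ties in neighbour distances are ignored, and this is not how autoFlagR computes its scores.

```r
lof_sketch <- function(x, k = 5) {
  x <- as.matrix(x)
  stopifnot(k >= 2, k < nrow(x))
  d <- as.matrix(dist(x))  # pairwise Euclidean distances
  n <- nrow(d)
  diag(d) <- Inf           # a point is not its own neighbour

  # n x k matrix: row i holds the indices of point i's k nearest neighbours
  nn <- t(apply(d, 1, function(row) order(row)[seq_len(k)]))

  # Distance from each point to its k-th nearest neighbour
  kdist <- d[cbind(seq_len(n), nn[, k])]

  # Local reachability density: inverse of the mean reachability distance
  lrd <- vapply(seq_len(n), function(i) {
    reach <- pmax(kdist[nn[i, ]], d[i, nn[i, ]])
    1 / mean(reach)
  }, numeric(1))

  # LOF: mean density of the neighbours divided by the point's own density
  vapply(seq_len(n), function(i) mean(lrd[nn[i, ]]) / lrd[i], numeric(1))
}

# Toy data: 50 clustered points plus one far-away point in row 51
set.seed(42)
pts <- rbind(matrix(rnorm(100), ncol = 2), c(10, 10))
scores <- lof_sketch(pts, k = 5)
which.max(scores)  # row index of the most isolated point
```

Values near 1 indicate a point about as dense as its neighbours, and values well above 1 indicate relative isolation. With the package, scoring is a single call: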

# Score anomalies using Isolation Forest
scored_data <- score_anomaly(
  data,
  method = "iforest",
  contamination = 0.05
)

# View anomaly scores
head(scored_data[, c("patient_id", "anomaly_score")], 10)
#>    patient_id anomaly_score
#> 1           1    0.13311290
#> 2           2    0.00000000
#> 3           3    0.02017313
#> 4           4    0.07379963
#> 5           5    0.35066988
#> 6           6    0.19438446
#> 7           7    0.25554936
#> 8           8    0.20328744
#> 9           9    0.72285379
#> 10         10    0.55022196

Step 4: Flag Top Anomalies

Flag records as anomalous based on threshold or contamination rate:
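As a rough illustration of contamination-based flagging, the base R sketch below marks the top fraction of records by score. The helper name `flag_top_fraction()` and the rounding rule are assumptions for illustration, not the package's internals.

```r
flag_top_fraction <- function(scores, contamination = 0.05) {
  stopifnot(contamination > 0, contamination < 1)
  # Number of records to flag (assumed rule: round, but flag at least one)
  k <- max(1, round(length(scores) * contamination))
  # Rank 1 is the highest score; ties are broken by position
  rank(-scores, ties.method = "first") <= k
}

# Hypothetical scores for 200 records, evenly spread over (0, 1]
scores <- (1:200) / 200
flags <- flag_top_fraction(scores, contamination = 0.05)

sum(flags)    # 10 records flagged out of 200
which(flags)  # rows 191 to 200, the ten highest scores
```

A fixed cut-off is the other option: a comparison such as `scores >= 0.95` flags every record at or above the chosen score, however many that turns out to be. In the package, the same step is a single call: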

# Flag top anomalies
flagged_data <- flag_top_anomalies(
  scored_data,
  contamination = 0.05
)

# View flagged anomalies
anomalies <- flagged_data[flagged_data$is_anomaly, ]
head(anomalies[, c("patient_id", "anomaly_score", "is_anomaly")], 10)
#>     patient_id anomaly_score is_anomaly
#> 24          24     0.9515207       TRUE
#> 36          36     0.9757864       TRUE
#> 48          48     0.9658148       TRUE
#> 115        115     0.9977989       TRUE
#> 127        127     0.9491570       TRUE
#> 130        130     0.9771116       TRUE
#> 141        141     0.9522229       TRUE
#> 151        151     0.9684421       TRUE
#> 168        168     0.9556123       TRUE
#> 185        185     1.0000000       TRUE

Step 5: Generate Audit Report

Generate comprehensive PDF, HTML, or DOCX reports:

# Generate PDF report (saves to tempdir() by default)
generate_audit_report(
  data,
  filename = "my_audit_report",
  output_dir = tempdir(),
  output_format = "pdf",
  method = "iforest",
  contamination = 0.05
)

Key Features

- Automated Preprocessing: Handles identifiers, scales numerical features, and encodes categorical variables

- Multiple AI Algorithms: Supports Isolation Forest and Local Outlier Factor (LOF) methods

- Benchmarking Metrics: Calculates AUC-ROC, AUC-PR, and Top-K Recall when ground truth labels are available

- Professional Reports: Generates PDF/HTML/DOCX reports with visualizations and prioritized audit listings

- Tidy Interface: Designed to work seamlessly with the tidyverse
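When ground truth labels are available, ranking metrics such as those listed above can be computed from the scores alone. The following base R sketch shows AUC-ROC (via the rank-sum identity) and Top-K Recall; AUC-PR is left out for brevity. The function names `auc_roc()` and `top_k_recall()` and the toy vectors are hypothetical and are not part of the autoFlagR API.

```r
# AUC-ROC: probability that a random anomaly outranks a random normal record.
auc_roc <- function(scores, labels) {
  labels <- as.logical(labels)
  n_pos <- sum(labels)
  n_neg <- sum(!labels)
  stopifnot(n_pos > 0, n_neg > 0)
  r <- rank(scores)  # average ranks, so tied scores count as half
  (sum(r[labels]) - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)
}

# Top-K Recall: share of true anomalies found among the k highest scores.
top_k_recall <- function(scores, labels, k) {
  labels <- as.logical(labels)
  top <- order(scores, decreasing = TRUE)[seq_len(k)]
  sum(labels[top]) / sum(labels)
}

# Toy example: six records, two of which are true anomalies
scores <- c(0.9, 0.8, 0.7, 0.6, 0.5, 0.4)
labels <- c(1, 0, 1, 0, 0, 0)

auc_roc(scores, labels)              # 0.875
top_k_recall(scores, labels, k = 2)  # 0.5
```

Top-K Recall is often the more practical number for an audit, since reviewers usually have capacity for only a fixed number of records.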

Next Steps

- See the Healthcare Example vignette for a detailed walkthrough

- Learn about Benchmarking with ground truth labels

- Explore the Function Reference for detailed documentation